Sorry for the radio silence. It’s been nearly a month, and in that time, I’ve started a new contract, started a podcast, watched world politics nearly implode, and worked on an amazing campaign I hope to share with you soon. All this to say, life has been a bit hectic.

But through all the noise, one thought has been stuck in my head: why does everything in tech feel so… weird right now?

AI-generated video, voice, podcasts, assistants, chat and code are now everywhere. People are turning against lazy AI slop, and for good reason. Our brains, for the most part, are incredibly good at detecting it, even when we can't say exactly why.

It’s the feeling you get watching a viral video of an emotional support kangaroo at an airport, knowing deep down that no such creature has ever cleared customs. Our brains are finely tuned instruments for authenticity. If you feel a sense of unease when looking at a hyper-realistic but flawed digital image, you're likely experiencing the uncanny valley.

This psychological phenomenon, first described by roboticist Masahiro Mori in 1970, refers to the dip in our emotional response when a figure appears almost, but not perfectly, human.

A study presented at the Federation of European Neuroscience Societies (FENS) Forum in 2024 revealed that while people aren't great at consciously distinguishing between real and AI-generated voices, our brains react differently. Human voices tend to activate brain regions associated with memory and empathy, while AI voices trigger areas linked to error detection and attention.

It seems our subconscious is a much better bullshit detector than we are. Our brains are hardwired for pattern recognition, picking up on the minute inconsistencies in rhythm, intonation, and tone that AI still struggles to replicate authentically.

This brings us to a philosophical fork in the road. Down one path lies the RoboCop approach: we accept that machines are different, visibly so. They are powerful tools (part man, part machine, all cop), but no one is mistaking them for their next-door neighbour. Down the other lies the Terminator approach: the pursuit of a perfect human replica, an AI wrapped in synthetic skin, designed to be indistinguishable from us. Tech seems hell-bent on the latter.

Last year, I sat on a panel judging final-year design students' projects. One student had built an automaton with the sole purpose of replicating subtle human qualities like breathing and minute facial movements. Watching this doll-like face, which we knew was fake, perform these incredibly subtle human actions was deeply unsettling.

It's a feeling that's hard to pin down, and quite unlike our reaction to Boston Dynamics robots. Those are impressive feats of engineering, but they are so overtly mechanical that they don't trigger the same eerie feeling (though, I guess, everyone still wants to kick them).

This obsession with replicating human qualities feels like a gimmick. When AI assistants are programmed with filler words, a slight cough, or a deliberate pause, it doesn't feel natural. These are affectations, bolted on to make the model feel more "human" because we’re aiming for an AGI that mirrors us.

But what if the human way of thinking is flawed? Why are we pouring millions into building a voice agent that can phone a restaurant and mimic human conversation to book a table, when it could just integrate directly with the booking system's API?

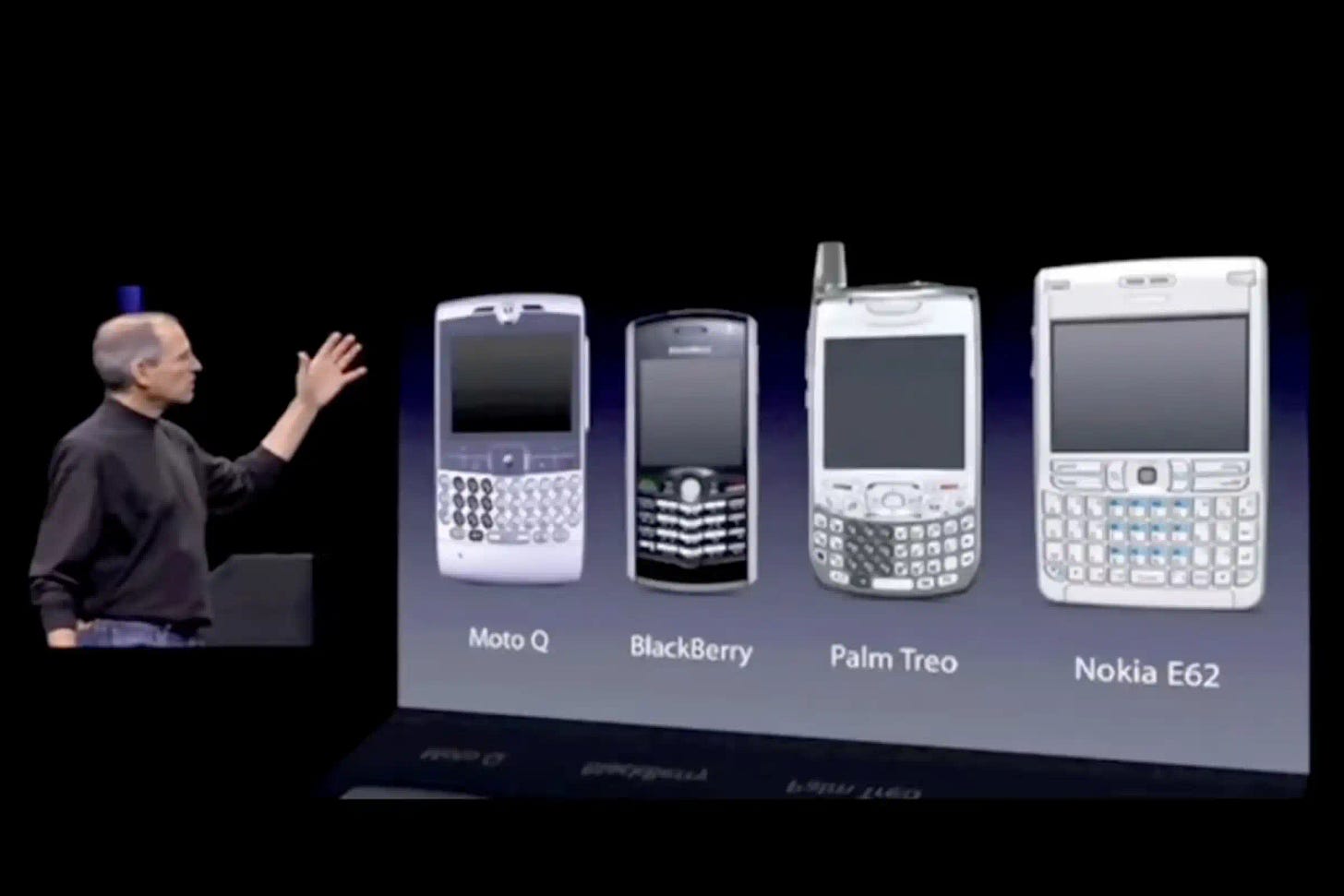

We've built this entire artifice based on how we think the world should work, because that's the only model we know. It’s like the pre-iPhone era, when BlackBerry and Nokia were convinced their competitive moat was the physical keyboard. They were designing for a paradigm they understood, unable to see the potential of a completely new interface.

We are living in the keyboard era of AI. Voice assistants and chatbots are our keyboards. We’re on the cusp of the touchscreen moment, but we haven't figured out what it is yet. The closest we’ve come is agentic AI: systems that can autonomously perform tasks and make decisions.

Yet even these, with their reasoning abilities, can feel more like a pain than a genuine solution. Some labs are even testing AI in simulated work environments, hoping it can learn by replicating a "day in the life." This approach is fundamentally flawed. It's built on mimicry, whereas true human expertise comes from years of complex pattern recognition, not from simulating Slack conversations and scoring points.

The World Economic Forum's Future of Jobs Report 2025 predicts significant disruption: it projects that by 2030 around 170 million jobs will be created and 92 million displaced, with technological change among the biggest drivers. The message, essentially: learn how to work with these tools, or get left behind.

But the real innovation won't come from building solutions that look and sound like the products we use today. It will happen when we stop trying to create a better human and start building something entirely new, something that leverages the unique predictive and analytical power of these emerging technologies.

In the early 2000s, we were obsessed with skeuomorphism: the design philosophy of making digital items resemble their real-world counterparts. It was a transitional step to make new technology feel familiar.

We are past that. The goal shouldn’t be to build a perfect digital replica of a flawed human process. It should be to build a process that is natively digital, natively intelligent.

My prediction is that this will be a fundamental shift away from conversational interfaces and towards predictive, autonomous systems that operate on our behalf, without needing to pretend they’re one of us.

The true touchscreen moment for AI will be when it sheds its human-like skin and takes on a form we can’t yet fully imagine, one that is truly, uniquely intelligent.

I totally agree with 'shedding the skin'. I honestly think, and have found, that AI can't seem to support this. I have tried looking to AI for innovative ideas in UI/UX, and whether it's bias or something else, it is limited; I have found nothing truly inspiring. Humans will still need to innovate to achieve these breakthroughs. Great article. Really enjoyed it.